Thanks to the rapid evolution of the Internet, we now live in a digital world. Social media platforms like Facebook, WhatsApp and Twitter have grown phenomenally, and all of them come with chat functionality. Anyone can chat with anyone, from any corner of the world. Some people send toxic messages intentionally and, in very rare cases, unintentionally as well (through typos, erroneous predictive text and so on). Since any of us can end up receiving a toxic message, how can we avoid this embarrassing situation? Perspective API offers a solution: it uses trained machine learning models in the backend to identify toxic messages. It's a very cool feature, right? This blog explains it in detail.

Hello Makers,

I hope you all are doing well. This is my first ever blog, and I am so excited and happy to share my knowledge and experience with all the makers around the world.

First off, let me introduce myself. My name is Vijayabharathi. I am an Advanced Certified Mendix Consultant and I am honored to be working with some of the best brains at MXTechies – APAC’s biggest Mendix Technology Service Provider! Now, let’s dive into the blog without further ado.

The agenda of the blog is:

1) Evolution of communication and why healthy conversations are really important.

2) Perspective API and how to consume Perspective API’s services in Mendix.

There’s a saying in Tamil by the ancient Tamil poet, Thiruvalluvar.

“Even if nothing else can be restrained, one must control his tongue; if not, he will suffer because of the harm inflicted by his words.”

Communication is vital for everything and everyone on this planet, as we are all interdependent, and only effective communication helps us to be cordial and co-exist in peace. However, it’s equally important to be careful about how we communicate. In the beginning, conversations were face-to-face. In due course, they moved to letters and telephone calls. After the digital revolution, email and video chat became the order of the day. Today, some giant companies are working on the metaverse, which is supposed to become the future of communication!

What is the metaverse?

“In futurism and science fiction, the metaverse is a hypothetical iteration of the Internet as a single, universal, and immersive virtual world that is facilitated by the use of virtual reality and augmented reality headsets. In colloquial use, a metaverse is a network of 3D virtual worlds focused on social connection.”

Why are healthy conversations important?

For example, let us take gaming. Who doesn’t love to play games? In the 90s, games like Super Mario, Contra, etc. were quite popular.

Back then, we didn’t have an option to chat with our friends; we could interact only with the characters in the game. As games evolved, we became able to play with our friends over text chat, voice chat and so on. Popular games like Valorant and PUBG Mobile have an option to report toxic players. Professional gamers stream their content through streaming platforms like Twitch and YouTube, and gaming streams are watched by a lot of kids. As per a survey, young people aged 16 to 24 watch gaming streams a lot. In group chats, some people may send toxic messages, and everyone watching the stream, including the kids, can be affected by them. On most streaming platforms, channel moderators keep an eye out for these kinds of toxic users. However, we can’t expect a moderator to spend most of their time playing watchdog.

If we have the option to remove or block toxic chats automatically, it will be of great help to both streamers and viewers. This is where Perspective API comes into play.

Perspective API:

Perspective API uses machine learning models to identify abusive comments. It returns a toxicity score for each comment we send. If the toxicity score is high, we can consider that comment toxic. Let’s dive deep into the integration part.

I have already created a sample chat interface application using Mendix. To consume the services of Perspective API, we need an API key before we can proceed. Getting one takes a few steps, so a little patience is crucial at this stage. Even Edison invented the light bulb only after 1000 unsuccessful attempts. Right?

https://en.wikipedia.org/wiki/Thomas_Edison

Click on the link below to go to the Perspective site.

https://perspectiveapi.com/how-it-works/

Scroll down a little and you will see the Go to developer site button. Click on it.

https://developers.perspectiveapi.com/s/

Once you click on the button, the developer site opens in a new tab. Next, click on the Get started button. It redirects you to the Perspective API documentation site, where you can see the step-by-step documentation for requesting an API key.

https://developers.perspectiveapi.com/s/docs-get-started

Pre-requisites: Google account.

If you don’t have a Google account, click on the link below to create one. It takes only 2 to 5 minutes.

https://support.google.com/mail/answer/56256?hl=en

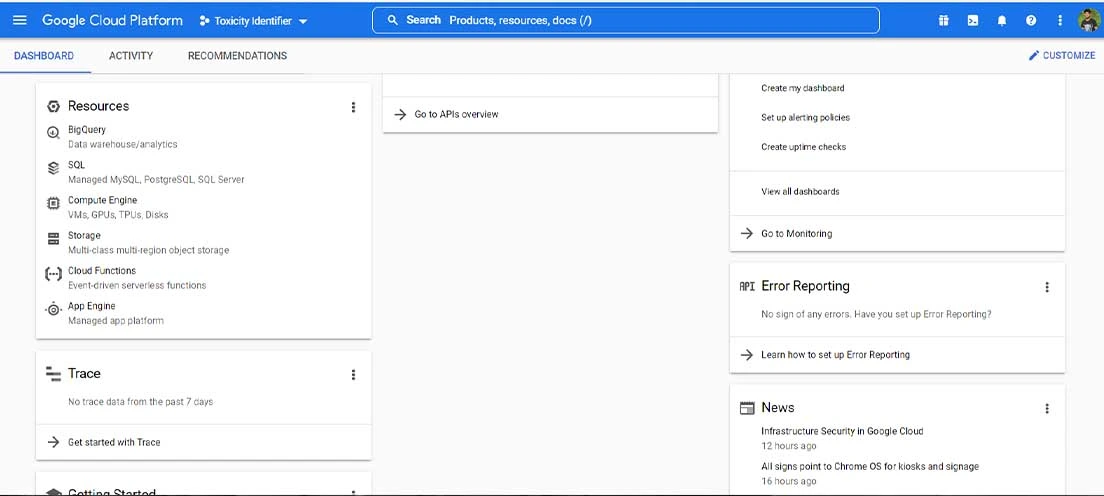

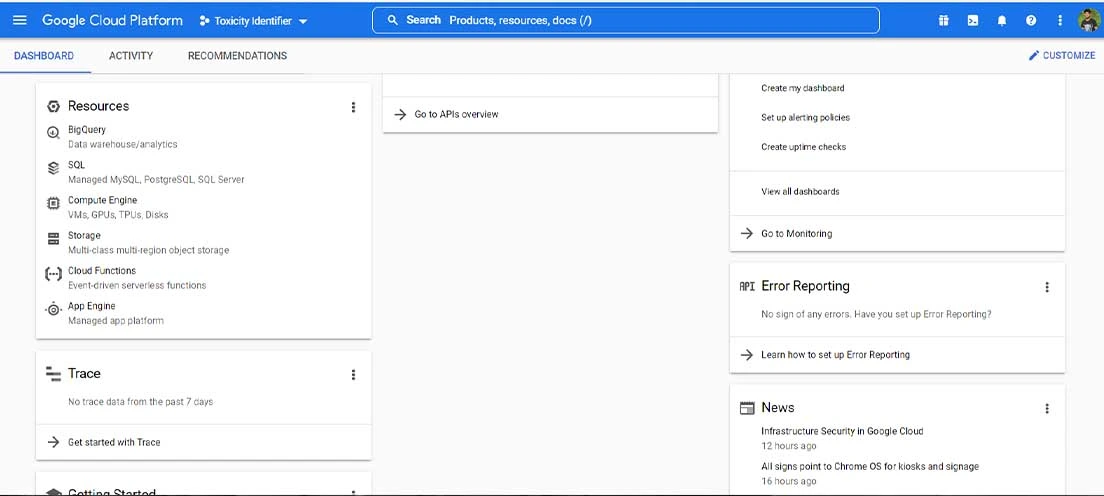

Once you have created a Google account, go to the Google Cloud console dashboard page by clicking on this link.

https://console.cloud.google.com/home/dashboard?project=toxicity-identifier

Next, we need to create a project in Google Cloud Platform (GCP) to use the Perspective Comment Analyzer API.

Steps are already provided on the Perspective API documentation page to create a GCP project.

Once you are done creating the project, click on the menu icon in the top left corner. It will open the side navigation menu. Click on APIs & Services, then on Enabled APIs & Services.

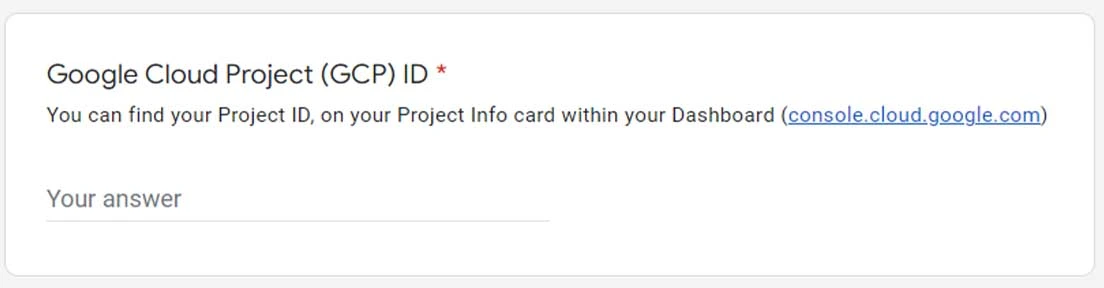

Next, click on the + Enable APIs & Services button at the top. Then search for Perspective Comment Analyzer API. Once you find the service, click on it and enable it. You may think we have completed all the steps to get an API key, but we are not done yet. We still need to fill in the Perspective API request form, after which the API key arrives by mail within an hour. The form asks for the ID of a GCP project that has the Perspective Comment Analyzer API enabled, which is the main reason we spent time on the Google Cloud Platform project.

Just click on the link below now to get your API key within an hour.

https://docs.google.com/forms/d/e/1FAIpQLSdhBBnVVVbXSElby-jhNnEj-Zwpt5toQSCFsJerGfpXW66CuQ/viewform

It’s done now. We have completed almost 50% of the work, makers. Awesome & way to go! Now, let’s come back to our Mendix chat application!

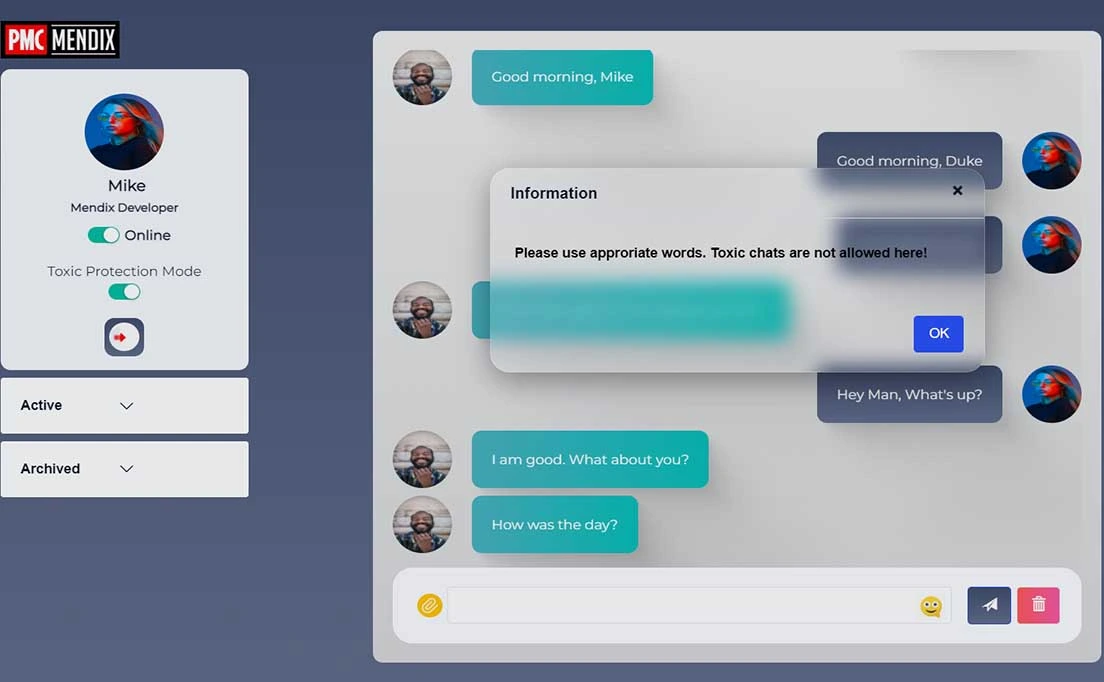

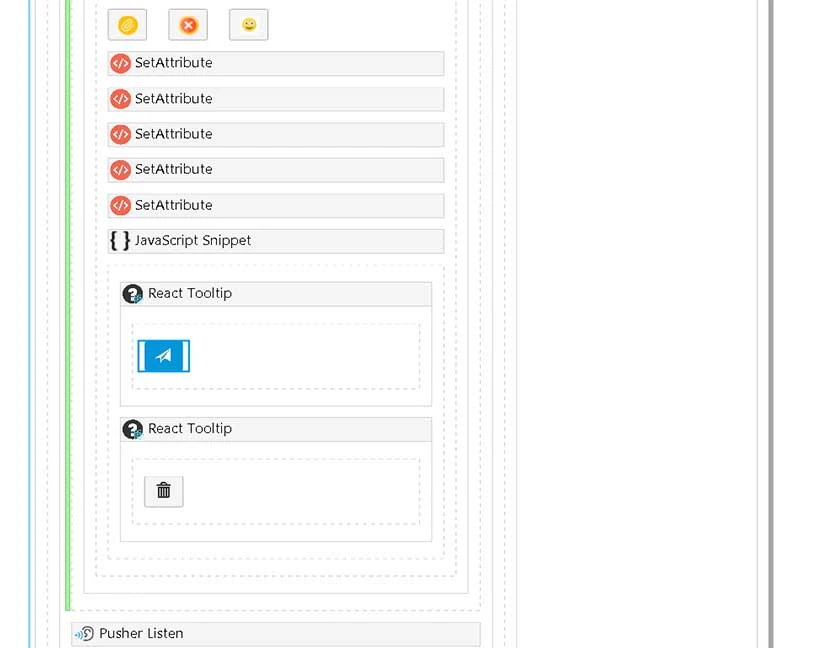

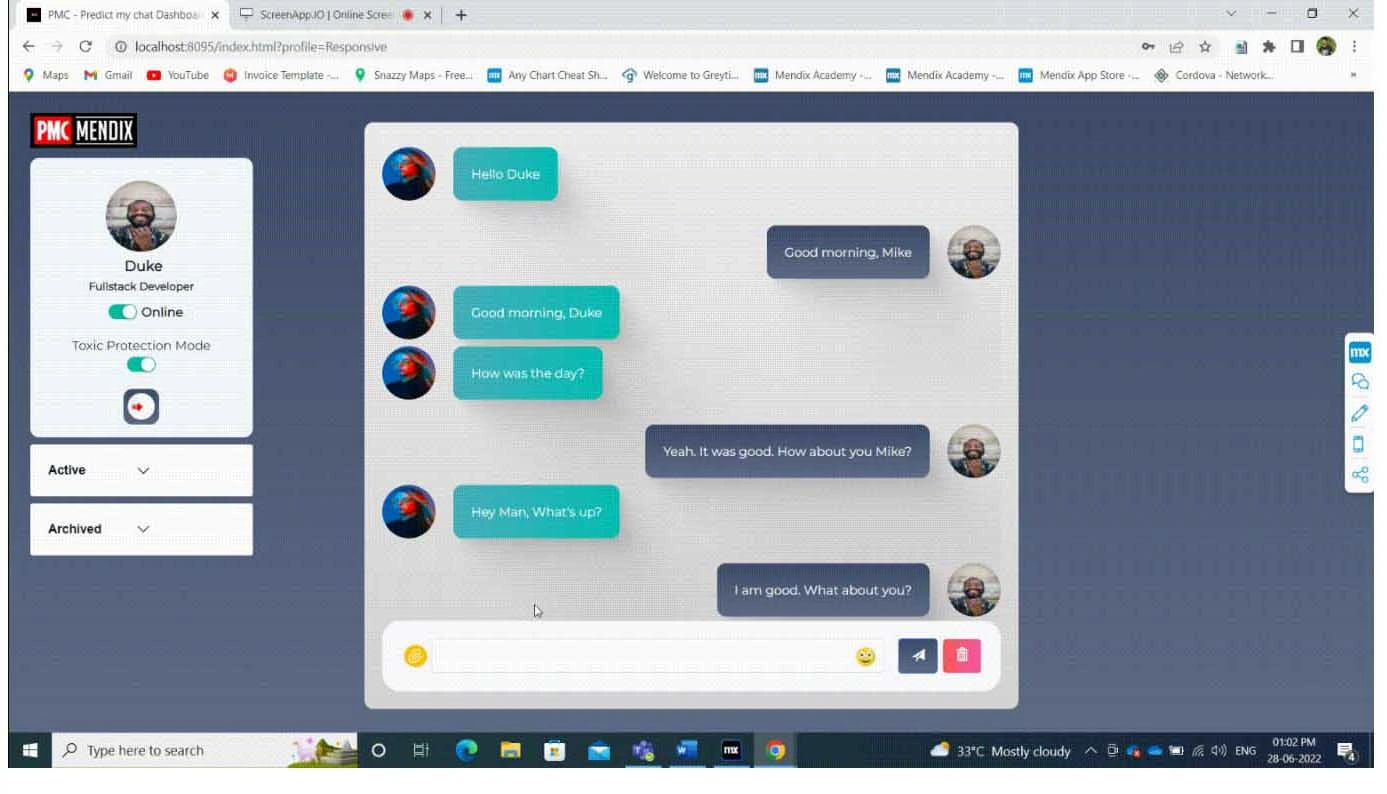

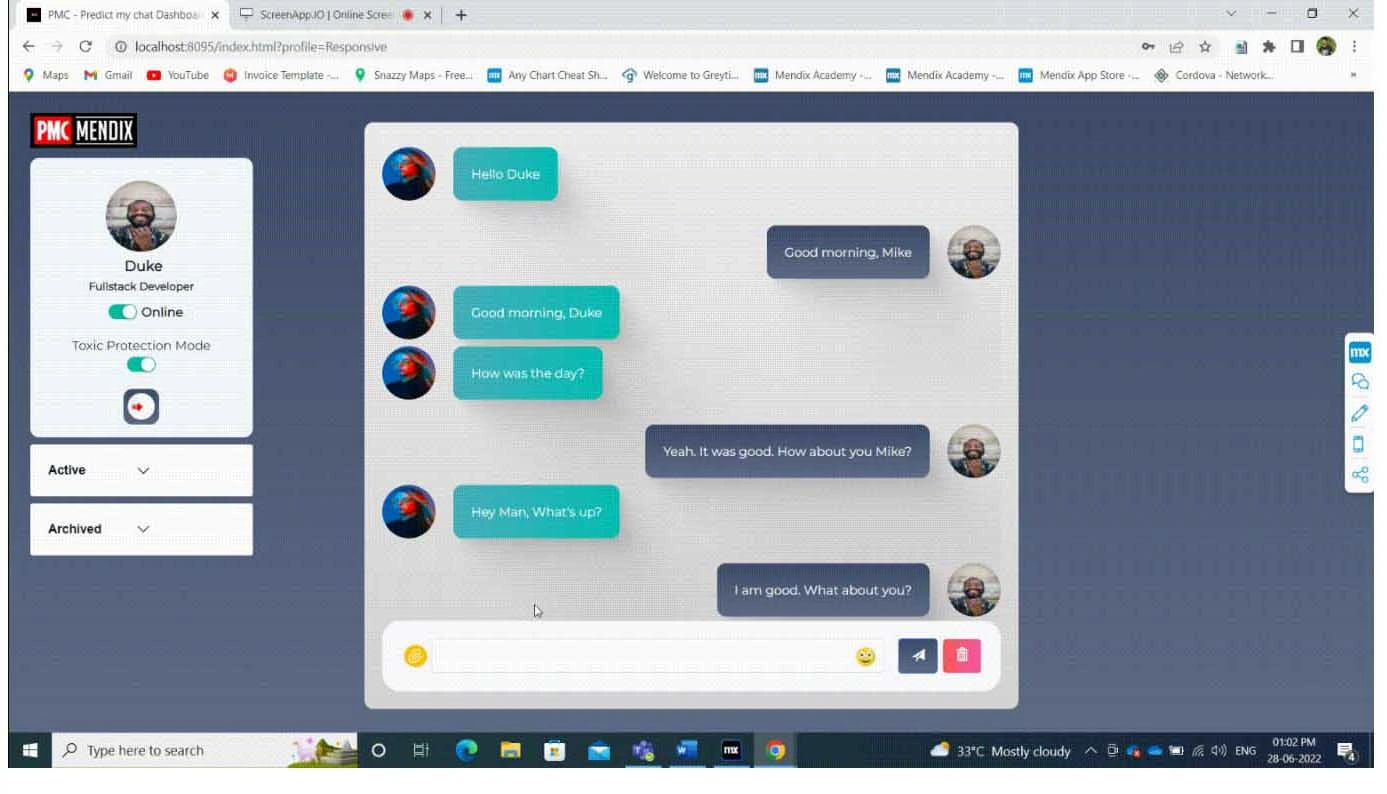

Here is the simple chat interface layout which I developed earlier. The Send button calls a simple nanoflow that asks Perspective API to analyze whether the given chat is toxic or not.

This is what my nanoflow looks like. It’s very simple, isn’t it? 😉

Here comes the interesting part for all developers: debugging the flow. In Mendix 8 and earlier, we didn’t have an option to debug a nanoflow. Just like Thor came up with Stormbreaker to fight Thanos, the Mendix team came up with the nanoflow debugger in Mendix 9.

As Mendix developers, we can feel it. This is what we had been waiting for, and upvoting in the ideas section of the Mendix forum, for a long time.

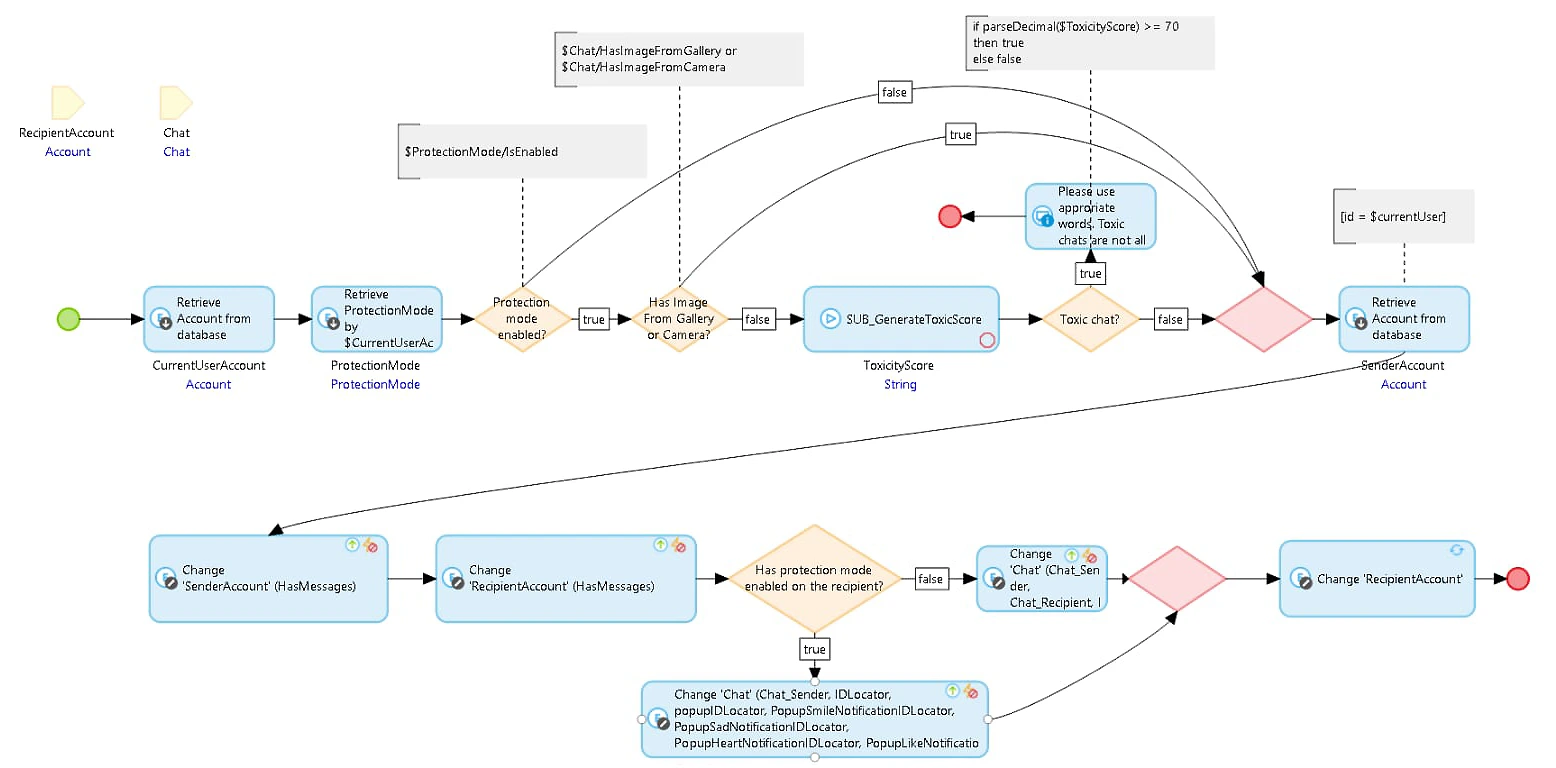

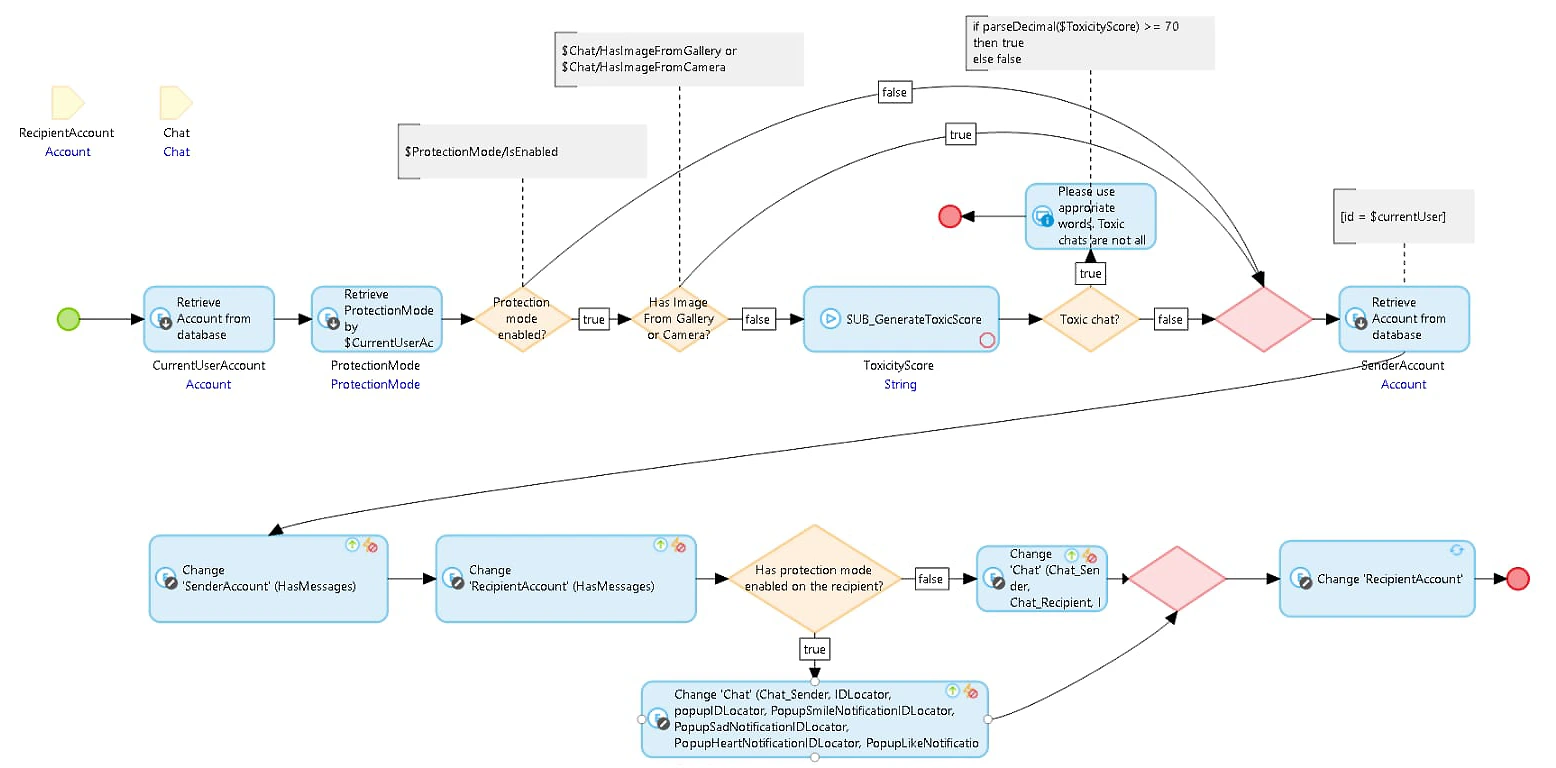

This nanoflow takes two input parameters: the Recipient Account object and the Chat object.

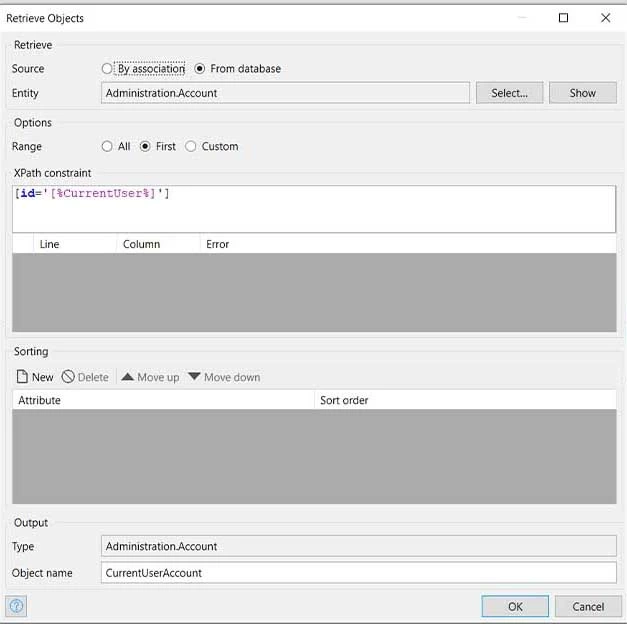

First, I retrieve the currently logged-in user account with the help of the Retrieve activity. I know that using a System token is not a best practice. Instead, we can go with the generalization approach to fetch the currently logged-in user account. I used the token approach for the time being.

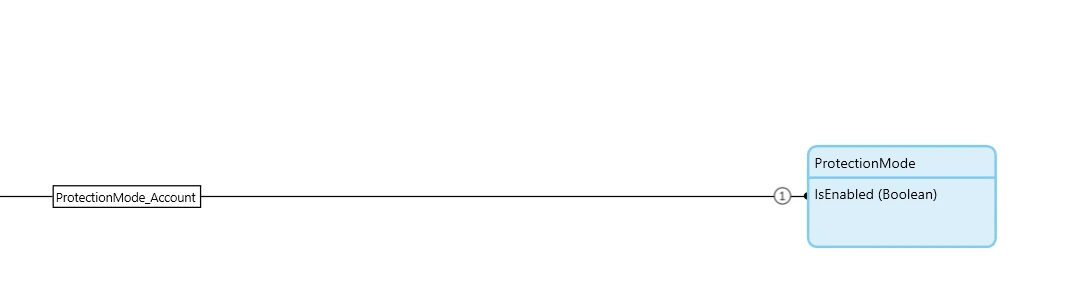

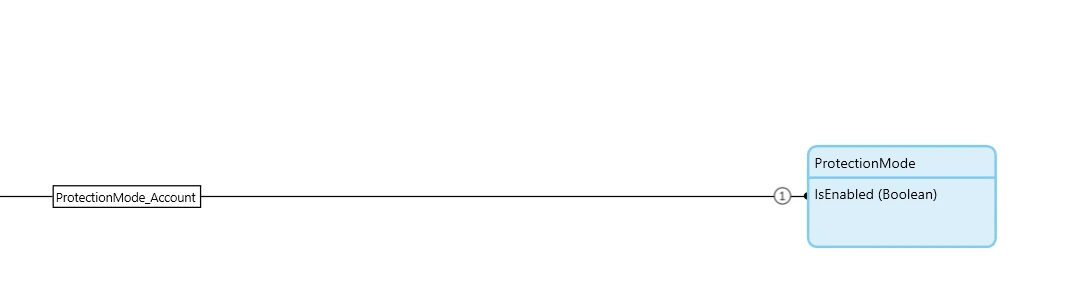

Next, we have a one-to-one association between the Protection mode entity and the Account entity. The Protection mode entity has one Boolean attribute (IsEnabled) that controls whether we need to check for toxic comments or not. To improve the user experience, we have given this control to the user: senders can decide whether their own messages are checked. On the other side, if recipients have enabled protection mode, they will not receive a toxic message. This is cool. Right?

In the next decision activity, I check whether the user has enabled protection mode or not. If it is enabled, the flow checks, with the help of a Boolean attribute, whether the user is sending an image or a text message with this chat. If there is no image, it goes on to the SUB_GenerateToxicScore sub-microflow call activity.

This is the microflow that sends the request to Perspective API. First, it checks whether the chat has an image or not. I added this validation to avoid a REST call error, so that only text messages are sent to the API.

Our input body JSON structure looks like this:

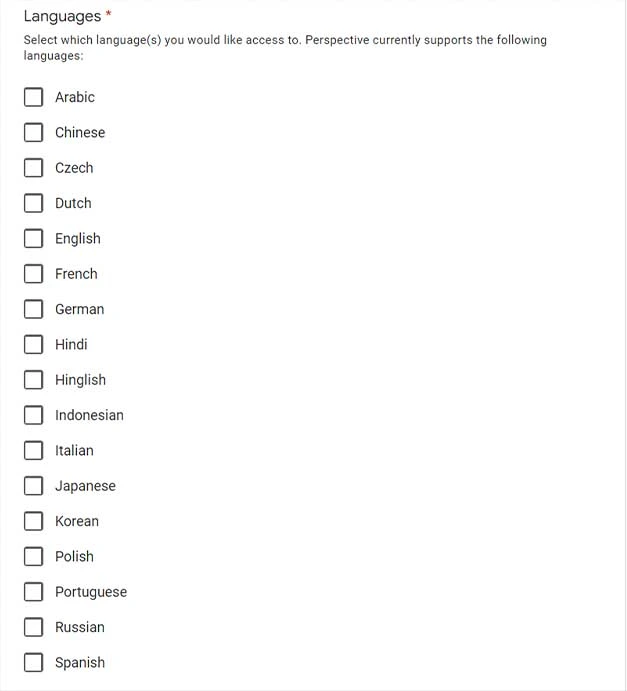

In the text attribute of the comment object, we need to pass the text message that we want to check for toxicity. In the languages array, we need to mention the language code of the text message, because Perspective API supports multiple languages such as Arabic, Spanish and English.

I have tested only with English, because I am unaware of any toxic words in any other language. Just kidding 😉.

We can leave the requestedAttributes object as it is for now. Once our body JSON is ready, we pass this string variable to the next Call REST activity.
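For readers who prefer to see the body outside of a screenshot, here is a minimal Python sketch of the same structure. It only uses the fields named above (comment.text, languages and requestedAttributes) and assumes that TOXICITY is the attribute being requested; the function name build_request_body is made up for this illustration and is not part of the Mendix app.

```python
import json


def build_request_body(message: str, language: str = "en") -> str:
    """Return the JSON body for a Perspective API analyze request as a string.

    Hypothetical helper for illustration; assumes TOXICITY is the only
    requested attribute.
    """
    body = {
        "comment": {"text": message},             # the chat text to analyze
        "languages": [language],                  # language code of the text
        "requestedAttributes": {"TOXICITY": {}},  # attribute to score
    }
    return json.dumps(body)


if __name__ == "__main__":
    print(build_request_body("Have a nice day, makers!"))
```

Building the body from a dictionary and serializing it with json.dumps takes care of escaping quotes and special characters in the chat text, which is easy to get wrong when concatenating strings by hand.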

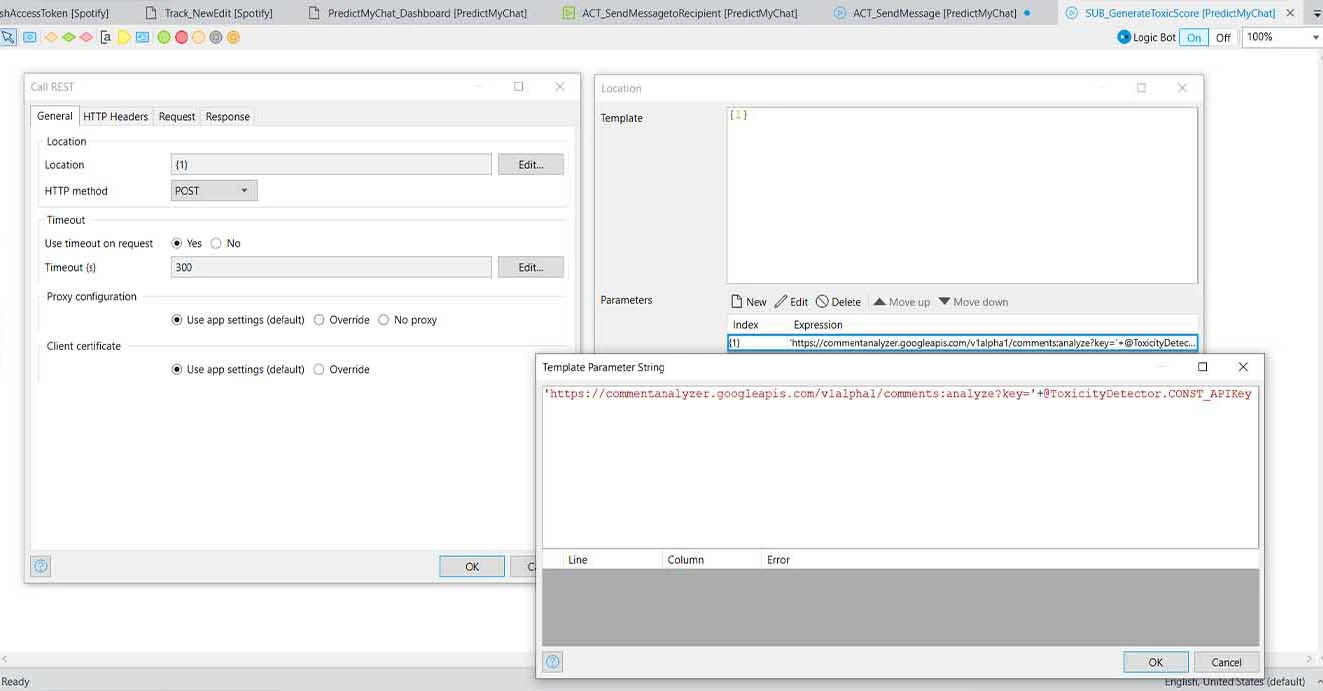

Perspective API endpoint:

'https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze?key=' + @ToxicityDetector.CONST_APIKey

This is the endpoint to which we send our POST request, with the API key appended as the key query parameter. I have stored my API key in a constant (CONST_APIKey).

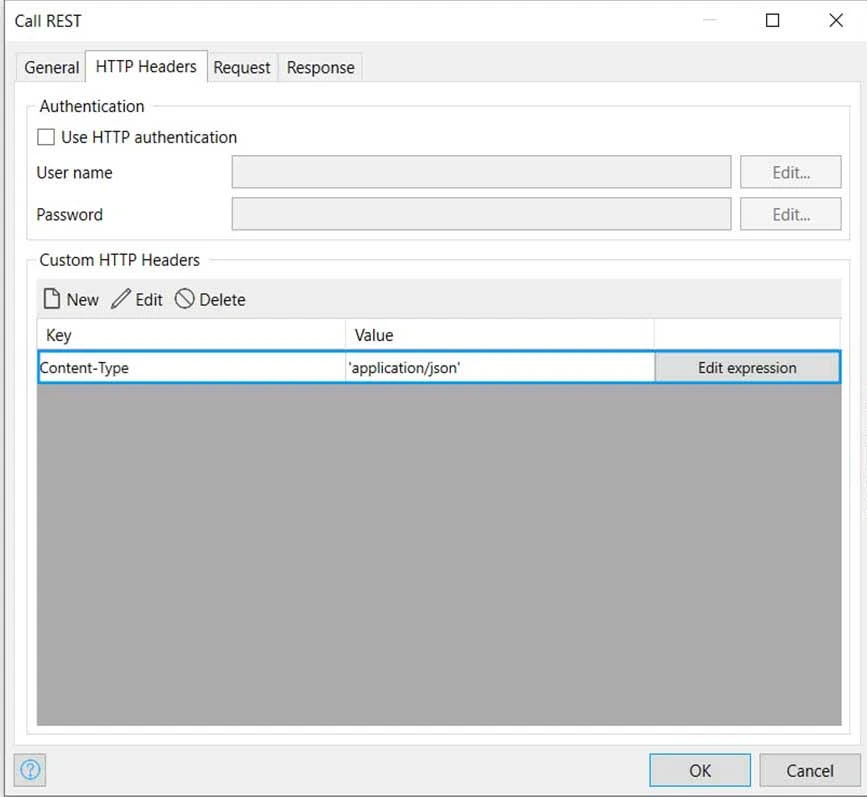

In the HTTP headers section, we need to add the Content-Type header. In the previous section, we created our body as a JSON string variable, so I set the JSON content type (application/json) in this header.

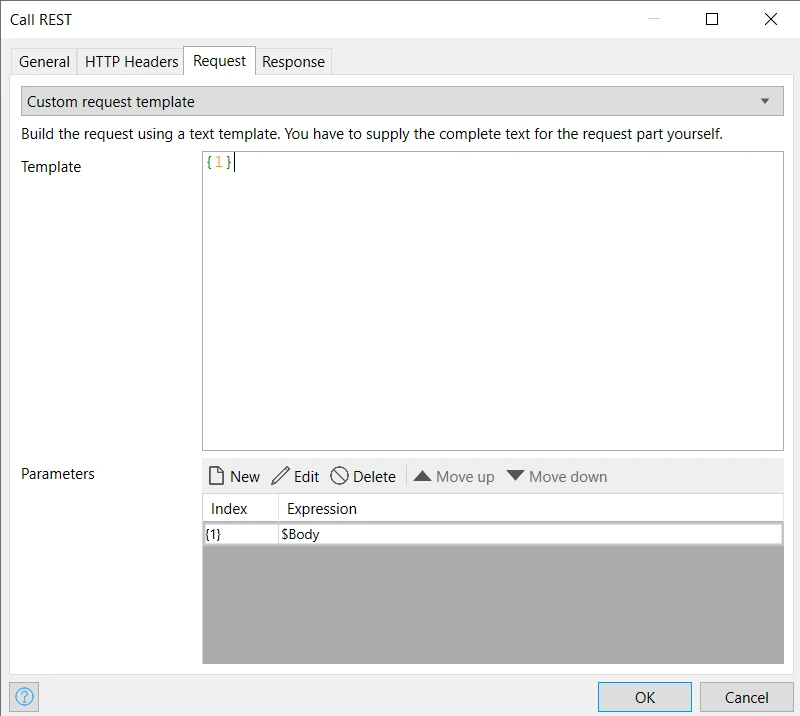

In the Request tab, I passed the body JSON string variable in the parameters section, as shown here:

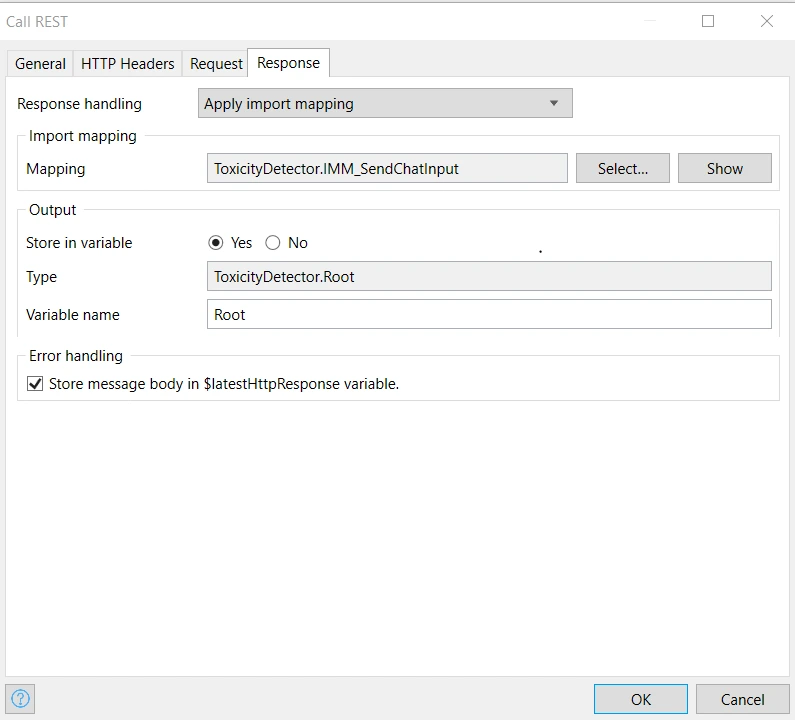
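The same request configuration (endpoint, API key, Content-Type header and JSON body) can be sketched outside Mendix as well. The Python below is only an illustration using the standard library; the helper names build_analyze_request and analyze are hypothetical, and the API key is passed in as a plain argument rather than read from a Mendix constant.

```python
import json
import urllib.request

ENDPOINT = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_analyze_request(api_key: str, body_json: str) -> urllib.request.Request:
    """Prepare the POST request without sending it (hypothetical helper)."""
    return urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",                   # key goes in the query string
        data=body_json.encode("utf-8"),                # JSON body as bytes
        headers={"Content-Type": "application/json"},  # same header as in Mendix
        method="POST",
    )


def analyze(api_key: str, body_json: str) -> dict:
    """Send the request and return the parsed JSON response.

    Needs a valid API key and network access, so it is not called here.
    """
    with urllib.request.urlopen(build_analyze_request(api_key, body_json)) as resp:
        return json.load(resp)
```

Keeping the request construction separate from the network call makes it easy to inspect the URL, headers and body before anything is actually sent, much like stepping through the Call REST activity in the debugger.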

We have almost completed the configuration now. The final part is the Response section. We can use an import mapping to read the response from Perspective API.

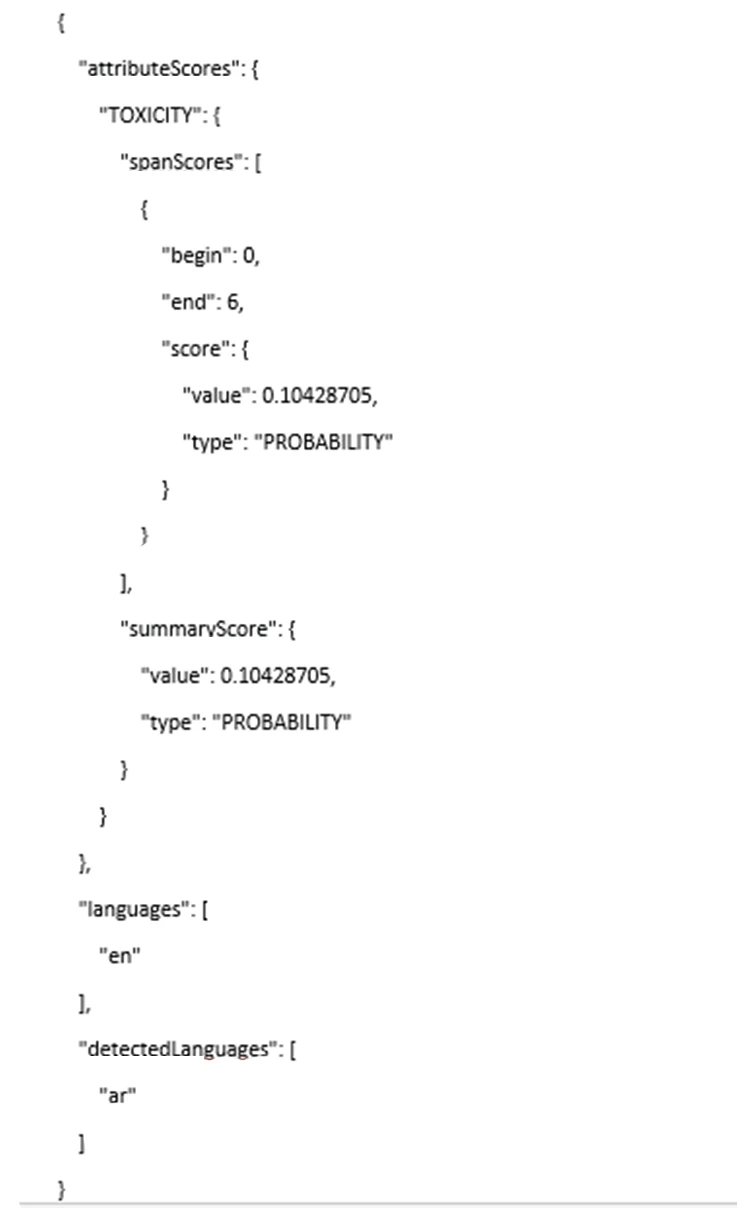

The Perspective API response JSON structure looks like this:

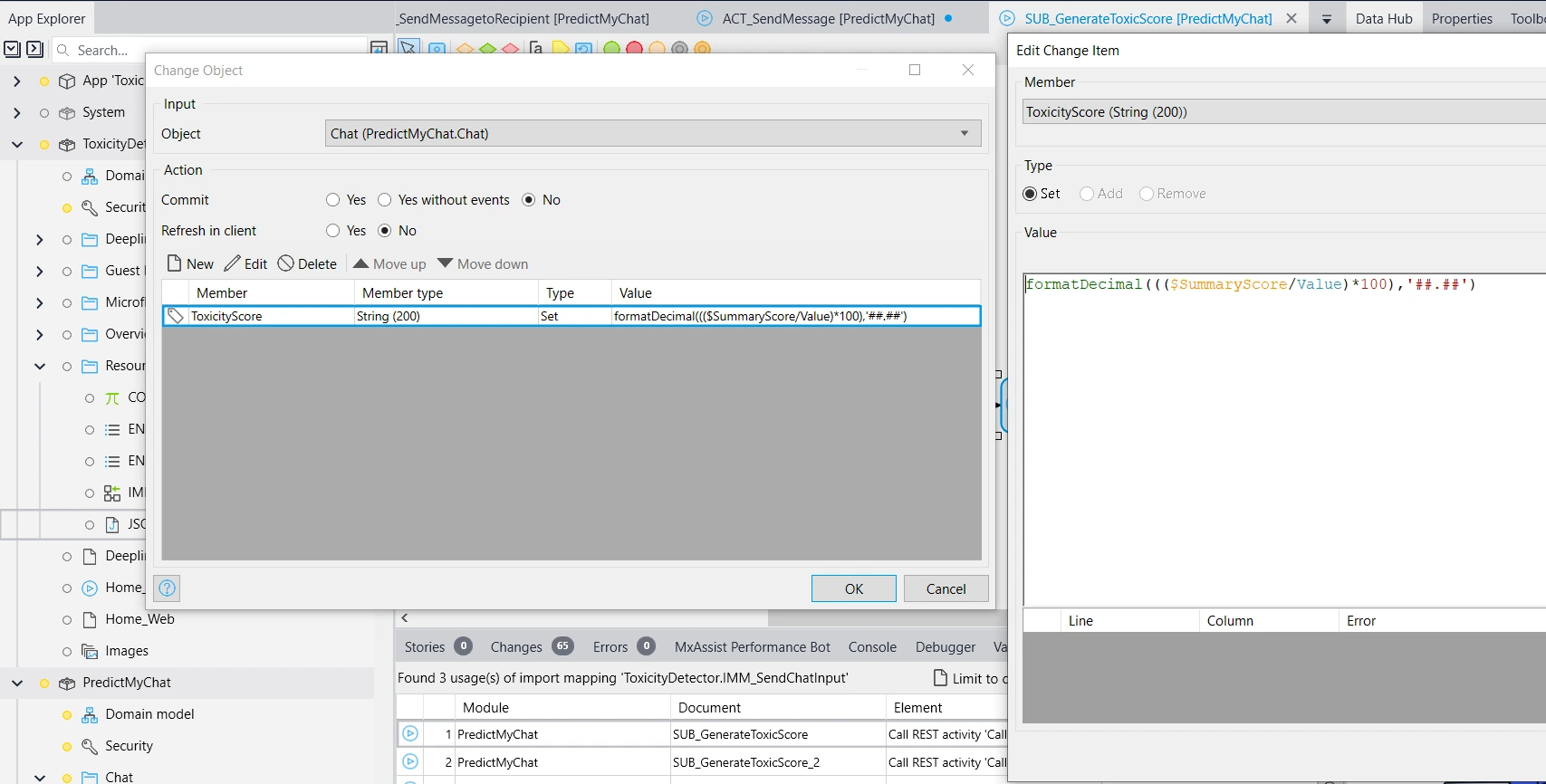

We can get the toxicity score from the value attribute of the SummaryScore object. This value is a probability between 0 and 1, so once we have it, we convert it into percentage format using the formatDecimal function.

Once the sub-microflow returns the toxicity score, we can check in a decision activity whether it exceeds the limit we set. In this case, I have set 70 as the limit: if the returned score is greater than or equal to 70, we consider that chat toxic.
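As a rough illustration of what the import mapping and the conversion do, here is a small Python sketch. It assumes the response nests the score under attributeScores, then TOXICITY, then summaryScore.value; the sample response is made-up data, and toxicity_percentage is a hypothetical helper name.

```python
def toxicity_percentage(response: dict) -> float:
    """Read the summary score (0 to 1) and return it as a percentage."""
    score = response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]
    return round(score * 100, 2)


# Made-up sample response, for illustration only.
sample_response = {
    "attributeScores": {
        "TOXICITY": {
            "summaryScore": {"value": 0.75, "type": "PROBABILITY"},
        }
    },
    "languages": ["en"],
}

if __name__ == "__main__":
    print(toxicity_percentage(sample_response))  # prints 75.0
```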

If the condition is true, instead of committing the chat object, we show a message asking the user to use appropriate words. If the condition is false, we fetch the SenderAccount object using the Retrieve activity, set it on the Chat_SenderAccount association, and the chat goes through. With this approach, we can block toxic comments or messages automatically.
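Put together, the decision logic of the flow can be sketched in Python roughly as follows. This is a simplified illustration, not the nanoflow itself: the names decide, score_text and the "send"/"block" results are hypothetical, and it assumes that chats with an image, or chats sent while protection mode is off, simply skip the toxicity check.

```python
from typing import Callable

TOXICITY_LIMIT = 70.0  # percentage limit used in the decision activity


def decide(
    text: str,
    protection_enabled: bool,
    has_image: bool,
    score_text: Callable[[str], float],
    limit: float = TOXICITY_LIMIT,
) -> str:
    """Return "block" for a toxic text chat, otherwise "send".

    score_text stands in for the sub-microflow that calls Perspective API
    and returns the toxicity score as a percentage.
    """
    # Assumption: no check when protection mode is off or the chat has an image.
    if not protection_enabled or has_image:
        return "send"
    if score_text(text) >= limit:
        return "block"  # show the "use appropriate words" message instead
    return "send"       # commit the chat with its sender association


if __name__ == "__main__":
    fake_scorer = lambda _text: 92.0  # stand-in for the real API call
    print(decide("some rude text", True, False, fake_scorer))  # prints block
```

Passing the scorer in as a function keeps the decision testable without calling the real API, which is handy while tuning the limit.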

Below, I have attached the Sample Chat interface application with a working demo. Here, Duke is trying to send a toxic message to Mike. But our Perspective API easily identifies that toxic chat with the help of machine learning models in the backend. The intended message will not be sent to Mike’s account.

Let's conclude! With the help of trained machine learning models, we are able to identify toxic messages and block them automatically. We can filter out and delete all toxic messages with a single click. This would be very helpful in scenarios like one-to-one conversations with strangers or group chats among communities.

Thanks for your precious time. I hope you guys like this blog, and it is helpful for your future projects. I will see you soon in another interesting blog. Have a nice day makers and go make it 😊!!